Heavily fragmented indexes can degrade query and crawl performance.

Cause :

Fragmentation exists when indexes have pages in which the logical ordering, based on the key value, does not match the physical ordering inside the data file. All leaf pages of an index contain pointers to the next and the previous pages in the index. This forms a doubly linked list of all index/data pages. Ideally, the physical order of the pages in the data file should match the logical ordering. Overall disk throughput is significantly increased when the physical ordering matches the logical ordering of the data.
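To make the idea of logical versus physical ordering concrete, here is a minimal sketch in Python. It is a simplified model, not SQL Server's exact fragmentation formula: the function name and the rule "a hop is out of order when the next page is not the physically adjacent one" are assumptions made for illustration.

```python
def fragmentation_percent(physical_ids):
    """Return the share of out-of-order hops, as a percentage.

    physical_ids lists the physical page numbers of the leaf pages,
    given in logical (key) order, i.e. following the next-page pointers.
    """
    if len(physical_ids) < 2:
        return 0.0
    # A hop is counted as fragmented when the logically next page
    # is not the physically next page in the data file.
    out_of_order = sum(
        1 for cur, nxt in zip(physical_ids, physical_ids[1:]) if nxt != cur + 1
    )
    return 100.0 * out_of_order / (len(physical_ids) - 1)


print(fragmentation_percent([10, 11, 12, 13]))  # contiguous pages
print(fragmentation_percent([10, 12, 11, 13]))  # every hop jumps around
```

With contiguous pages a scan can read the file sequentially; in the second case every hop forces the disk to seek, which is why throughput drops.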

Effects on Performance :

1. Query latency might increase.

2. Crawl rate may decrease as more time is spent writing metadata to the property database.

3. The property database defragmentation health rule detects that one or more property databases have fragmented indexes and attempts to correct the fragmentation. Note: when this rule is correcting the fragmentation, query latency and crawl rate are affected.

If you see a message like "Search - One or more property databases have fragmented indices." in Central Administration > Monitoring > Review Problems and Solutions, one possible resolution is:

1. Go to Central Administration > Manage service applications > Search Application > Index reset in the left navigation > Reset Now.

2. Go to Central Administration > Manage service applications > Search Application > Manage Content Sources > start full crawls for the content sources in order of importance, according to your business needs.

Reference : One or more Search property databases have fragmented indices (SharePoint Server 2010)

Monday, June 10, 2013

Saturday, June 8, 2013

Best practices for Blob cache

The BLOB cache reduces traffic between SQL Server and the front ends by keeping a copy of frequently accessed files on each WFE. Enabling the BLOB cache also adds information to the HTTP headers that tells the client browser to fetch the data from its own cache rather than making round trips.

It requires the publishing framework. You might have to increase RAM on each front end by about 800 bytes per cached file on average. Also, server-relative URLs must be shorter than 160 characters to be BLOB cached (with a path in the file system shorter than 260 characters).

However, a large BLOB cache can sometimes reduce performance under heavy load instead of helping. The BLOB cache index is written to the hard drive periodically and before a recycle. While this serialization is in progress, it affects the time taken to serve client requests.

It is even worse when an app pool recycle corrupts the index and files are no longer served from the BLOB cache. This generally happens when the recycle timeout is shorter than the time taken to save the index to disk.

Best practices for Blob cache:

1. Keep content stable in live environments, and plan the BLOB cache for content that changes rarely (see the post on when the BLOB cache is invalidated by the framework).

2. Prefer adding MIME types for the files being BLOB cached. Alternatively, in web.config [BlobCache node] use the parameter browserFileHandling = "nosniff" [possible values: nosniff|strict].

3. The content of the BLOB cache folder should be managed by the framework. Let it be created and managed by SharePoint.

4. Server-relative URLs must be shorter than 160 characters to be BLOB cached (with a path in the file system shorter than 260 characters).

5. Know how to flush the BLOB cache (IISRESET, PowerShell, or changing the cache location in web.config).

6. For a large BLOB cache, increase the WriteIndexInterval parameter in web.config [BlobCache node]. The default is 60 seconds. For a very large BLOB cache you might want to serialize the index far less often, for example every hour [3600] or every 24 hours [86400]. {This setting is not recommended for a small BLOB cache.}

7. The recommended time limit for shutting down and starting up the web application is 300 seconds. The default is 90 seconds. For a large BLOB cache you might even go up to 600 seconds.

8. Plan for sufficient disk space according to the size of the content.
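The sizing figures above can be turned into a quick back-of-the-envelope check. The sketch below is in Python and only restates the numbers from this post (800 bytes per file, 160 and 260 character limits). The function names are hypothetical, and the way the on-disk path is built (cache folder plus URL) is an assumption for illustration; the real cache layout adds its own subfolders.

```python
BYTES_PER_CACHED_FILE = 800  # average RAM per cached file (figure from this post)
MAX_URL_LENGTH = 160         # server-relative URL must be shorter than this
MAX_PATH_LENGTH = 260        # file system path must be shorter than this


def estimated_index_ram_mb(file_count):
    """Rough extra RAM per front end, in megabytes, for file_count cached files."""
    return file_count * BYTES_PER_CACHED_FILE / (1024 * 1024)


def is_cacheable(server_relative_url, cache_folder):
    """Check both length limits for one file.

    Assumption: the cached copy lives at cache_folder + URL with
    backslashes; the real layout differs and is usually longer.
    """
    disk_path = cache_folder.rstrip("\\") + server_relative_url.replace("/", "\\")
    return (
        len(server_relative_url) < MAX_URL_LENGTH
        and len(disk_path) < MAX_PATH_LENGTH
    )


print(round(estimated_index_ram_mb(1_000_000)))  # about 763 MB for a million files
print(is_cacheable("/Docs/report.pdf", "C:\\myBlob\\Public"))
```

A million cached files therefore cost roughly three quarters of a gigabyte of RAM on each front end, which is worth checking before enabling the cache for a whole web application.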


One thought on “Best practices for Blob cache”

I am getting the error below in Microsoft.SharePoint.Publishing.BlobCache.CreateFile(String tempFileName, SPFile file, BlobCacheEntry target, Int32 chunkSize) :

Process : w3wp.exe (_x-_-_)

Thread ID : _x-_-_

Area : SharePoint Foundation

Category : Performance

Event ID : n-_-

Level : Monitorable

Message :

An SPRequest object was reclaimed by the garbage collector instead of being explicitly freed. To avoid wasting system resources, dispose of this object or its parent (such as an SPSite or SPWeb) as soon as you are done using it. Allocation Id: {xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxx} This SPRequest was allocated at at Microsoft.SharePoint.Library.SPRequest..ctor() at Microsoft.SharePoint.SPGlobal.CreateSPRequestAndSetIdentity(SPSite site, String name, Boolean bNotGlobalAdminCode, String strUrl, Boolean bNotAddToContext, Byte[] UserToken, String userName, Boolean bIgnoreTokenTimeout, Boolean bAsAnonymous) at Microsoft.SharePoint.SPWeb.InitializeSPRequest() at Microsoft.SharePoint.SPListCollection.EnsureListsData(Guid webId, String strListName) at Microsoft.SharePoint.SPListCollection.ItemByInternalName(String strInternalName, Boolean bThrowException) at Microsoft.SharePoint.SPItemEventProperties.get_List() at Microsoft.SharePoint.Taxonomy.TaxonomyItemEventReceiver.ItemUpdating(SPItemEventProperties properties) at Microsoft.SharePoint.SPEventManager.RunItemEventReceiver(SPItemEventReceiver receiver, SPUserCodeInfo userCodeInfo, SPItemEventProperties properties, SPEventContext context, String receiverData) at Microsoft.SharePoint.SPEventManager.RunItemEventReceiverHelper(Object receiver, SPUserCodeInfo userCodeInfo, Object properties, SPEventContext context, String receiverData) at Microsoft.SharePoint.SPEventManager.c__DisplayClassc`1.b__6() at Microsoft.SharePoint.SPSecurity.RunAsUser(SPUserToken userToken, Boolean bResetContext, WaitCallback code, Object param) at Microsoft.SharePoint.SPEventManager.InvokeEventReceivers[ReceiverType](SPUserToken userToken, Guid tranLockerId, RunEventReceiver runEventReceiver, Object receivers, Object properties, Boolean checkCancel) at Microsoft.SharePoint.SPEventManager.InvokeEventReceivers[ReceiverType](Byte[] userTokenBytes, Guid tranLockerId, RunEventReceiver runEventReceiver, Object receivers, Object properties, Boolean 
checkCancel) at Microsoft.SharePoint.SPEventManager.ExecuteItemEventReceivers(Byte[]& userToken, Guid& tranLockerId, Object& receivers, ItemEventReceiverParams& itemEventParams, Object& changedFields, EventReceiverResult& eventResult, String& errorMessage) at Microsoft.SharePoint.Library.SPRequestInternalClass.GetFileAsStream(String bstrUrl, String bstrWebRelativeUrl, Boolean bHonorLevel, Byte iLevel, OpenBinaryFlags grfob, String bstrEtagNotMatch, String& pEtagNew, String& pContentTagNew) at Microsoft.SharePoint.Library.SPRequest.GetFileAsStream(String bstrUrl, String bstrWebRelativeUrl, Boolean bHonorLevel, Byte iLevel, OpenBinaryFlags grfob, String bstrEtagNotMatch, String& pEtagNew, String& pContentTagNew) at Microsoft.SharePoint.SPFile.GetFileStreamCore(OpenBinaryFlags openOptions, String etagNotMatch, String& etagNew, String& contentTagNew) at Microsoft.SharePoint.SPFile.GetFileStream(OpenBinaryFlags openOptions, String etagNotMatch, String& etagNew, String& contentTagNew) at Microsoft.SharePoint.SPFile.OpenBinaryStream() at Microsoft.SharePoint.Publishing.BlobCache.CreateFile(String tempFileName, SPFile file, BlobCacheEntry target, Int32 chunkSize) at Microsoft.SharePoint.Publishing.BlobCache.c__DisplayClass34.c__DisplayClass37.b__31() at Microsoft.Office.Server.Diagnostics.FirstChanceHandler.ExceptionFilter(Boolean fRethrowException, TryBlock tryBlock, FilterBlock filter, CatchBlock catchBlock, FinallyBlock finallyBlock) at Microsoft.Office.Server.Diagnostics.ULS.SendWatsonOnExceptionTag(UInt32 tagID, ULSCatBase categoryID, String output, Boolean fRethrowException, TryBlock tryBlock, CatchBlock catchBlock, FinallyBlock finallyBlock) at Microsoft.SharePoint.Publishing.BlobCache.c__DisplayClass34.b__30() at Microsoft.SharePoint.SPSecurity.c__DisplayClass4.b__2() at Microsoft.SharePoint.Utilities.SecurityContext.RunAsProcess(CodeToRunElevated secureCode) at Microsoft.SharePoint.SPSecurity.RunWithElevatedPrivileges(WaitCallback secureCode, Object param) at 
Microsoft.SharePoint.SPSecurity.RunWithElevatedPrivileges(CodeToRunElevated secureCode) at Microsoft.SharePoint.Publishing.BlobCache.FetchItemFromWss(Uri path, Boolean isHostNamedSite) at Microsoft.SharePoint.Publishing.BlobCache.RewriteUrl(Object sender, EventArgs e, Boolean preAuthenticate) at Microsoft.SharePoint.Publishing.PublishingHttpModule.AuthorizeRequestHandler(Object sender, EventArgs ea) at System.Web.HttpApplication.SyncEventExecutionStep.System.Web.HttpApplication.IExecutionStep.Execute() at System.Web.HttpApplication.ExecuteStep(IExecutionStep step, Boolean& completedSynchronously) at System.Web.HttpApplication.PipelineStepManager.ResumeSteps(Exception error) at System.Web.HttpApplication.BeginProcessRequestNotification(HttpContext context, AsyncCallback cb) at System.Web.HttpRuntime.ProcessRequestNotificationPrivate(IIS7WorkerRequest wr, HttpContext context) at System.Web.Hosting.PipelineRuntime.ProcessRequestNotificationHelper(IntPtr managedHttpContext, IntPtr nativeRequestContext, IntPtr moduleData, Int32 flags) at System.Web.Hosting.PipelineRuntime.ProcessRequestNotification(IntPtr managedHttpContext, IntPtr nativeRequestContext, IntPtr moduleData, Int32 flags) at System.Web.Hosting.PipelineRuntime.ProcessRequestNotificationHelper(IntPtr managedHttpContext, IntPtr nativeRequestContext, IntPtr moduleData, Int32 flags) at System.Web.Hosting.PipelineRuntime.ProcessRequestNotification(IntPtr managedHttpContext, IntPtr nativeRequestContext, IntPtr moduleData, Int32 flags)

Please share your thoughts on this.

Wednesday, June 5, 2013

cache user accounts

For an FBA web application using claims, we should specifically use FBA users such as 0#.f|abc|username for the SuperReader and SuperUser accounts.

Choose two such accounts, preferably new ones.

Open the user policy for the web application in Central Admin.

With the option All zones, give SuperUser Full Control and SuperReader Full Read rights.

Set SuperReader and SuperUser for caching with the PowerShell commands below:

$myWebApp = Get-SPWebApplication -Identity "<WebApplication>"

$myWebApp.Properties["portalsuperuseraccount"] = "<SuperUser>"

$myWebApp.Properties["portalsuperreaderaccount"] = "<SuperReader>"

$myWebApp.Update()

Finally, run IISRESET once.


when blob cache must be invalidated by framework ?

As a general rule, any kind of change to file content or permissions should force the cache to be rebuilt.

When a change is observed, the file is removed from the cache index.


| Operation | Effect on BLOB Cache |
| --- | --- |
| Editing a file that is BLOB cached | The cached file is removed from the cache. |
| Deleting a file that is BLOB cached | The cached file is removed from the cache. |
| Deleting a file that is not BLOB cached | All cached files in the list containing the file that was deleted will be removed from the cache |
| Deleting a folder in a list | All cached files in the list containing the folder that was deleted will be removed from the cache |
| Renaming or Deleting a list | All cached files that were in the list will be removed from the cache |
| Making any changes to a list | All cached files that were in the list will be removed from the cache |
| Renaming or Deleting a web | All cached entries in the web are removed from the cache |
| Adding or removing permissions to a web, changing inheritance, adding, updating, or deleting roles | All cached entries in the web are removed from the cache |
| Deleting a site collection | All cached entries contained in the site collection are removed from the cache |
| Modifying User Policy on the web application | Entire cache is abandoned, a new cache folder is started |

Ref : http://technet.microsoft.com/en-us/library/ee424404(v=office.14).aspx


Getting multiple hits to files after enabling BLOB cache

The SharePoint BLOB cache is capable of responding to partial content requests.

For large files, a client application such as a media player can decide to send partial requests to the server (you can see requests with status code 206 in the IIS logs). If the BLOB cache is disabled, SharePoint Server ignores partial content requests and responds to them with the entire file.

So it is quite possible that you now see multiple requests for large files that were earlier sent to the browser in a single request: with BLOB cache support enabled, they are divided into chunk requests.
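To illustrate why one download turns into several log entries, here is a small Python sketch of how a client might split a file into HTTP Range requests. The function name and the chunk size are assumptions for illustration; real media players choose their own ranges.

```python
def byte_ranges(file_size, chunk_size):
    """Return the Range header values a client could send for one file.

    Each returned value corresponds to one request that the server
    can answer with status 206 (Partial Content).
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    ranges = []
    start = 0
    while start < file_size:
        # Range ends are inclusive, so the last byte is start + chunk_size - 1
        end = min(start + chunk_size, file_size) - 1
        ranges.append("bytes=%d-%d" % (start, end))
        start = end + 1
    return ranges


# A 10-byte file fetched in 4-byte chunks needs three requests
print(byte_ranges(10, 4))  # ['bytes=0-3', 'bytes=4-7', 'bytes=8-9']
```

Every entry in the returned list shows up as its own hit in the IIS log, which matches the multiple hits described above.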


Tuesday, June 4, 2013

System.UnauthorizedAccessException to BlobCache Folder

A common scenario is that the BLOB cache is used by multiple applications hosted on the same server, but running under different app pools / app pool accounts.

This can make your server slow, or make it stop responding with an access denied message for the BLOB cache folder.

Here are a few workarounds you can go for:


- Use a separate root folder on the drive for each web application.

- Give WSS_WPG full access to all these folders.

- Use the same app pool account for all web applications using the BLOB cache.

References:

http://technet.microsoft.com/en-us/library/cc263445.aspx

http://support.microsoft.com/kb/2015895


SharePoint 2013 - Authentication, authorization, and security

- User sign-in

  - Classic-mode authentication is deprecated and can be managed only through PowerShell; claims-based authentication is now the default. The PowerShell cmdlet Convert-SPWebApplication can be used to migrate accounts; the MigrateUsers method is deprecated. Ref : http://msdn.microsoft.com/en-us/library/gg251985.aspx

  - The requirement to register claims providers is eliminated.

  - SharePoint 2013 Preview tracks FedAuth cookies in the new distributed cache service using Windows Server AppFabric Caching.

  - Better log management and more detailed logs.

- Services and app authentication

  - For more information on app principals, please visit Build apps for SharePoint.

  - The server-to-server security token service (STS) provides access tokens for server-to-server authentication.

Ref : http://msdn.microsoft.com/en-us/library/ms457529.aspx


Sunday, June 2, 2013

my website does not work well with newly released version of IE

This is a very common scenario: you face any number of issues with newer versions of browsers.

As a temporary solution, tweak your web.config like this:


<?xml version="1.0" encoding="utf-8"?>

<configuration>

<system.webServer>

<httpProtocol>

<customHeaders>

<clear />

<add name="X-UA-Compatible" value="IE=EmulateIE7" />

</customHeaders>

</httpProtocol>

</system.webServer>

</configuration>

Now here is the catch: you might face a few issues with SharePoint-provided black-box components such as search crawls. You might want to omit the clear tag to avoid such situations.


Labels:

SharePoint 2007,

SharePoint 2010,

SharePoint 2013

Query : Not able to open central Admin

On every page in Central Admin I am getting the error below:

System.InvalidOperationException: Post cache substitution is not compatible with modules in the IIS integrated pipeline that modify the response buffers. Either a native module in the pipeline has modified an HTTP_DATA_CHUNK structure associated with a managed post cache substitution callback, or a managed filter has modified the response. at System.Web.HttpWriter.GetIntegratedSnapshot(Boolean& hasSubstBlocks, IIS7WorkerRequest wr) at System.Web.HttpResponse.GetSnapshot() at System.Web.Caching.OutputCacheModule.OnLeave(Object source, EventArgs eventArgs) at System.Web.HttpApplication.SyncEventExecutionStep.System.Web.HttpApplication.IExecutionStep.Execute() at System.Web.HttpApplication.ExecuteStep(IExecutionStep step, Boolean& completedSynchronously)

And

Error initializing Safe control - Assembly:Microsoft.Office.SharePoint.ClientExtensions, Version=14.0.0.0, Culture=neutral, PublicKeyToken=71e9bce111e9429c TypeName: Microsoft.Office.SharePoint.ClientExtensions.Publishing.TakeListOfflineRibbonControl Error: Could not load type 'Microsoft.Office.SharePoint.ClientExtensions.Publishing.TakeListOfflineRibbonControl' from assembly 'Microsoft.Office.SharePoint.ClientExtensions, Version=14.0.0.0, Culture=neutral, PublicKeyToken=71e9bce111e9429c'.

What could be the possible reason behind this ?


Saturday, June 1, 2013

Offset Today in SPQuery Example

Here is a hypothetical situation:

You have no archival / expiration policies set up for your list, and every now and then you want to delete everything older than 30 days. What will you do?

This sample PowerShell script does the job and serves as an example of the Today offset (OffsetDays) in a CAML query:

$WebURL = "http://mysitecollection:100/myWeb";

$spWeb = Get-SPWeb -Identity $WebURL;

$sList = $spWeb.GetList("/myWeb/Lists/myList");

Write-Host $sList.Title;

# Items last modified more than 30 days ago (the OffsetDays value must be quoted)

$camlQuery = "<Where><Lt><FieldRef Name='Modified' /><Value Type='DateTime'><Today OffsetDays='-30' /></Value></Lt></Where>";

$spQuery = New-Object Microsoft.SharePoint.SPQuery;

$spQuery.Query = $camlQuery;

$spQuery.ViewFields = "<FieldRef Name='ID' />";

# Collect the IDs first so that items are not deleted while the result set is being enumerated

$ids = @($sList.GetItems($spQuery) | ForEach-Object { $_.ID });

$i = 0;

foreach ($id in $ids) {

$i++;

Write-Host $id -ForegroundColor Cyan

$deadItem = $sList.GetItemById($id);

$deadItem.Delete(); # use $deadItem.Recycle() instead to send the item to the recycle bin

}

# Number of items deleted

Write-Host $i;

$spWeb.Dispose();
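To show what the Today offset means, the following Python sketch builds the same CAML filter for an arbitrary number of days and computes the matching cutoff date. The helper names are hypothetical. Parsing the generated string with an XML parser is used here only to illustrate that the OffsetDays attribute value has to be quoted for the fragment to be well-formed.

```python
from datetime import date, timedelta
import xml.etree.ElementTree as ET


def older_than_query(days, field="Modified"):
    """Build a CAML Where clause matching items older than `days` days."""
    return (
        "<Where><Lt><FieldRef Name='%s' />"
        "<Value Type='DateTime'><Today OffsetDays='-%d' /></Value>"
        "</Lt></Where>" % (field, days)
    )


def cutoff_date(days, today=None):
    """Date that <Today OffsetDays='-days' /> resolves to on a given day."""
    today = today or date.today()
    return today - timedelta(days=days)


query = older_than_query(30)
ET.fromstring(query)  # raises ParseError if the fragment is not well-formed
print(query)
print(cutoff_date(30, date(2013, 6, 1)))  # 2013-05-02
```

Run on June 1, 2013, a 30 day offset selects everything last modified before May 2, 2013.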


Labels:

powershell,

SharePoint 2007,

SharePoint 2010,

SharePoint 2013

Authorization/Authentication

Authentication is determining the identity of a principal trying to log in via IIS. When a principal tries to authenticate to a system, credentials are provided to verify the principal's identity.

Microsoft Online IDs are issued and maintained by Microsoft, like the IDs for Office 365, Hotmail, SkyDrive, and Live accounts. Using a Microsoft ID, a user can authenticate to various systems with the same credentials.

Federated Identity (Single Sign-On) is a mechanism that allows users within your organization to use their standard Active Directory corporate username and password to access Office 365. Federation with Office 365 requires the use of Active Directory Federation Services (ADFS) 2.0.

Authorization is verifying an authenticated user's access to an application according to an Access Control List (ACL). When a user tries to access a SharePoint site collection, their username is checked against the permissions of the site, granted via SharePoint groups or directly. If no permission has been granted, access is denied.


Friday, May 31, 2013

Include specific folder content in BlobCache SharePoint

It is quite possible to instruct the BLOB cache framework to include only specific SharePoint document libraries. (I say document libraries because, as you may be aware, it does not work well with custom folders created using Designer etc.)


Suppose I have a few heavy .jpeg and .dhx files in a document library called HeavyContent.

I want to cache all .pdf, .doc, .docx, .flv, .f4v and .swf files in my web application, but .jpeg and .dhx files only from the document library "HeavyContent".

Search web.config for a tag similar to the one below and edit the path parameter:

<BlobCache location="C:\myBlob\Public" path="(\.(doc|docx|pdf|swf|flv|f4v)$|HeavyContent.*\.(dhx|jpeg)$)" maxSize="10" max-age="86400" enabled="true" />

This marks the items matched by the path parameter regex to be cached, with a max-age of 24 hours (86400 seconds).

\.(doc|docx|pdf|swf|flv|f4v)$ <----> everything that ends with one of the listed extensions

| <----> or

HeavyContent.*\.(dhx|jpeg)$ <----> content inside HeavyContent ending with dhx or jpeg

Disclaimer : I am not sure how performance will be affected by making the path parameter more complex.
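Before editing web.config, the path expression can be tried out against a few sample URLs. The sketch below uses Python's re module as a stand-in for the .NET regex engine that SharePoint uses; for a pattern this simple the two behave the same. The sample URLs and the function name are made up for illustration, and the case-insensitive flag is an assumption about how the cache matches URLs.

```python
import re

# Same expression as in the BlobCache path attribute above
BLOB_PATH = re.compile(
    r"(\.(doc|docx|pdf|swf|flv|f4v)$|HeavyContent.*\.(dhx|jpeg)$)",
    re.IGNORECASE,  # assumption: URLs are matched without regard to case
)


def would_be_cached(url):
    """Return True when the URL matches the path expression."""
    return BLOB_PATH.search(url) is not None


samples = [
    "/Docs/report.pdf",            # listed extension, any library
    "/Docs/manual.docx",           # listed extension, any library
    "/HeavyContent/big/photo.jpeg",  # jpeg inside HeavyContent
    "/Images/photo.jpeg",          # jpeg outside HeavyContent
]
for url in samples:
    print(url, would_be_cached(url))
```

Only the last sample is rejected, because .jpeg is accepted solely when HeavyContent appears earlier in the URL.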

Mime Types with BlobCache

Now suppose your IIS does not support some mime types like dhx and f4v. You have two options :

Option 1 : add mime type at server level in IIS.

Option 2 : use browserFileHandling = "Nosniff" in the webconfig section discussed above. [ Ref : http://blogs.msdn.com/b/ie/archive/2008/09/02/ie8-security-part-vi-beta-2-update.aspx ]

How to flush the BLOB cache

- IISRESET [Recommended: increase the startup and shutdown time limits on the web application to accommodate the extra time it takes to initialize or serialize the cache index for very large BLOB caches]

- PowerShell:

Write-Host -ForegroundColor White " - Enabling SP PowerShell cmdlets..."

# Load the SharePoint snap-in only if it is not loaded already

if ((Get-PSSnapin | Where-Object { $_.Name -eq "Microsoft.SharePoint.PowerShell" }) -eq $null)

{

Add-PSSnapin Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue

}

# Flush the BLOB cache of every web application in the farm

foreach ($webApp in Get-SPWebApplication) {

[Microsoft.SharePoint.Publishing.PublishingCache]::FlushBlobCache($webApp)

Write-Host "Flushed the BLOB cache for:" $webApp.Url

}

- In web.config, set enabled to "false", change the location parameter, then set enabled back to "true".


Watson bucket parameters

Can anybody help me understand what this means in the ULS logs?

------>

05/29/2013 08:22:02.49 w3wp.exe (0x1D38) 0x0DD4 SharePoint Server Unified Logging Service c91s Monitorable Watson bucket parameters: SharePoint Server 2010, ULSException14, 81eed5e0 "web content management", 0e00129b "14.0.4763.0", e9185677 "system.web", 0200c627 "2.0.50727.0", 4ef6c43b "sun dec 25 01:35:39 2011", 00002164 "00002164", 00000083 "00000083", 454b2bb9 "httpexception", 386e3162 "8n1b" 47e538f0-8fa3-4d3b-8010-1988b2590981

Sunday, April 21, 2013

How to pass XSL variable in to a Javascript function?

I found a good post that helps when working with XSLT in Data View Web Parts:

How to pass XSL variable in to a Javascript function?

Pass XSL variable to JavaScript function


Sunday, April 14, 2013

Create Variation Hierarchies with Machine Translation Enabled

Prerequisites

Before we start creating variations in SP 2013 and testing machine translation, the following two managed services must be present:

• Machine Translation Service

• User Profile Service Application

You can use the configuration wizard on the home page of Central Admin (http://centralAdmin/configurationwizards.aspx) to create all services.

• The application pool account used for the Machine Translation Service must have full control on the User Profile Service. This application pool account must also have permissions on http://servername/TargetSiteCollectionPath/Translation%20Status/

• Download the latest certificates from https://corp.sts.microsoft.com/Onboard/CertRenewal.html and install them on the SharePoint farm.

• Visit https://www.microsoft.com (run the browser as administrator) > Page properties > Certificates > install the certificates on the SharePoint farm.

Create Hierarchies

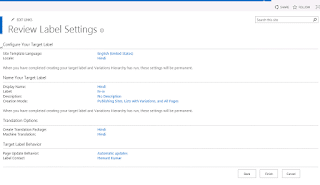

3. Create a target / child variation label :

Please make sure you have allowed machine translations for this target:

4. Now click on link to create Variation hierarchy :

5. Either wait for Variations Create Hierarchies Job Definition and Variations Propagate Sites and Lists Timer Job to run as scheduled or run them manually. You can verify what actions these jobs are taking @ http://servername/sites/var/VariationLogs/AllItems.aspx

6. After variations are created http://servername/sites/var/_layouts/15/VariationLabels.aspx looks like :

Publish and approve this page.

Manually run Variations Propagate Page Job Definition or let this job run as scheduled.

Visit Variation Logs (http://servername/sites/var/VariationLogs/AllItems.aspx) to verify page is being propagated to target variation.

Visit the test page and click on machine translation tab :

Output:

Publish and Approve the page after required edits.

You may also like:

SharePoint 2013 features overview

Before we start creating variations in SP 2013 and test machine translations, below mentioned two Managed services must be present:

• Machine Translation Service

• User Profile Service Application

You can use configuration wizard on home page of central admin (http://centralAdmin/configurationwizards.aspx) to create all services.

• Application pool account used for machine translation service must have full control on User profile services. This application pool account must have permissions on http://servername/TargetSiteCollectionPath/Translation%20Status/

• Download latest certificates from https://corp.sts.microsoft.com/Onboard/CertRenewal.html and install on SharePoint farm.

• Visit https://www.microsoft.com ( run as administrator) > Page properties > Certificates > Install certificates on the SharePoint Farm

Create Variations in SharePoint 2013

Create a publishing portal from Central Administration (http://centraladmin/_admin/createsite.aspx).

Create Hierarchies

1. In Site Settings of the root site of the site collection, go to Variation Labels (http://servername/sites/var/_layouts/15/VariationLabels.aspx):

2. Create the root variation label:

3. Create a target (child) variation label:

Make sure machine translation is allowed for this target:

4. Click the link to create the variation hierarchy:

5. Either wait for the Variations Create Hierarchies Job Definition and the Variations Propagate Sites and Lists Timer Job to run as scheduled, or run them manually. You can verify what these jobs are doing at http://servername/sites/var/VariationLogs/AllItems.aspx

6. After the variations are created, http://servername/sites/var/_layouts/15/VariationLabels.aspx looks like this:

Machine translation

Create a page on the root variation

Publish and approve this page.

Manually run the Variations Propagate Page Job Definition, or let it run as scheduled.

Visit Variation Logs (http://servername/sites/var/VariationLogs/AllItems.aspx) to verify that the page is being propagated to the target variation.

Submit page for Translation on Target Variation

Visit the test page and click the machine translation tab:

Output:

Publish and approve the page after making any required edits.

Thursday, March 14, 2013

Refiners in SharePoint 2013 Search

Here we will try to understand how refiners work in SharePoint 2013.

The Refinement web part passes a JSON object in the URL.

1. Go to View All Site Content (http://servername/sites/var/_layouts/15/viewlsts.aspx).

2. Create a custom list similar to the one below:

The column TermSetColumnPOC (Allow multiple values) is linked to a term set with these terms:

Term1, Term2, Term3, Term4, Term5, Term6, Term7, Term8, Term9

I want my search results page to display results when "Term8" is present in the column TermSetColumnPOC or NormalColumnPOC. I will also provide a filter that hides the results which do not have "Term8" in the column TermSetColumnPOC.

Then populate the custom list with data:

Searching for "Term1" should return items hk1, hk4, hk7, hk9.

Searching for "Term8" should return hk1, hk6, hk8.

Applying the refiner on "TermSetColumnPOC" with the value "Term8" should then return only hk1, hk8.

3. Create a content source under Search Administration in Central Administration that contains only your test web application. This is not mandatory, but it speeds up testing refiners because it drastically reduces crawl time.

Run a full crawl on this content source.

4. Go to Search Service Application > Search Schema.

Create a managed property "TermSetColumnPOCManaged" as shown below:

5. Run a full crawl again.

6. After this crawl is over, you can test refiners in your search center. I don't want one, so I will create my own simple page.

7. Enable the site collection feature "Search Server Web Parts and Templates".

8. Create a simple web part page and insert the following web parts on it:

Search Box, Search Results, Refinement

9. In the web part properties of the Refinement web part, choose TermSetColumnPOCManaged as one of the available refiners.

10. Publish and approve this page.

11. Type "Term1" in the search box, or enter the URL http://......./search1.aspx#k=Term1

11.1 Type "Term8" in the search box, or enter the URL http://......./search1.aspx#k=Term8

11.2 Type "Term8" in the search box and click "Term8" in the refiners

(URL-decode the value in the address bar to see what is being passed in the URL)

or

http://......./search1.aspx#Default={"k":"Term8","r":[{"n":"TermSetColumnPOCManaged","t":["\"ǂǂ5465726d38\""],"o":"and","k":false,"m":null}]}

(here the equivalent JSON object is passed as the parameter)

or

http://......./search1.aspx#Default={"k":"Term8","r":[{"n":"TermSetColumnPOCManaged","t":["\"Term8\""],"o":"and","k":false,"m":null}]}

(here the equivalent JSON object is passed as the parameter, but with the term's display value)

11.3 Search for "TermSetColumnPOCManaged:Term8", or

enter the URL http://......./search1.aspx#k=TermSetColumnPOCManaged:Term8
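The token in the first URL of step 11.2 reads like a two-character prefix (ǂǂ) followed by the hexadecimal UTF-8 bytes of the term label: 5465726d38 decodes to Term8. The sketch below illustrates that reading only. The function names are hypothetical helpers, not SharePoint APIs, and the encoding rule is an assumption inferred from the URLs above.

```javascript
// Hypothetical helpers (not SharePoint APIs). Assumption: a refinement token is
// the prefix U+01C2 U+01C2 followed by the hex-encoded UTF-8 bytes of the label.
const TOKEN_PREFIX = "\u01C2\u01C2";

// Turns a term label such as "Term8" into prefix + "5465726d38".
function encodeRefinementToken(label) {
  const bytes = Array.from(new TextEncoder().encode(label));
  const hex = bytes.map((b) => b.toString(16).padStart(2, "0")).join("");
  return TOKEN_PREFIX + hex;
}

// Turns a token back into the label; the prefix is optional on input.
function decodeRefinementToken(token) {
  const hex = token.startsWith(TOKEN_PREFIX)
    ? token.slice(TOKEN_PREFIX.length)
    : token;
  const pairs = hex.match(/.{2}/g) || [];
  const bytes = Uint8Array.from(pairs.map((pair) => parseInt(pair, 16)));
  return new TextDecoder().decode(bytes);
}

console.log(decodeRefinementToken(TOKEN_PREFIX + "5465726d38")); // prints "Term8"
```

This makes it easier to read a refiner URL copied from the address bar and to check which term it selects.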

Another example of JSON with the Refinement and Search Core Results web parts:

On the same page, search for "Term1" and, in the refiners, select "Term1",

or enter the URL:

http://......./search1.aspx#Default={"k":"Term1","r":[{"n":"TermSetColumnPOCManaged","t":["\"Term1\""],"o":"and","k":false,"m":null}]}

The result is the same both ways:

One more example of JSON with the search results page:

On the same page, search for "Term1" and, in the refiners, select "Term9",

or enter the URL:

http://......./search1.aspx#Default={"k":"Term1","r":[{"n":"TermSetColumnPOCManaged","t":["\"Term9\""],"o":"and","k":false,"m":null}]}

The result both ways is:
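The URL fragments in these examples can also be produced in code rather than typed by hand. The following is a minimal sketch, assuming the fragment is simply `#Default=` followed by the JSON text shown above. `buildRefinerHash` is a hypothetical helper, and the values of `o`, `k` and `m` are copied from the example URLs without interpreting them.

```javascript
// Hypothetical helper (not a SharePoint API): builds the "#Default=..." fragment
// used in the example URLs from a keyword and one refiner selection.
function buildRefinerHash(keyword, managedProperty, termLabel) {
  const state = {
    k: keyword, // search keyword
    r: [
      {
        n: managedProperty,         // refiner (managed property) name
        t: ['"' + termLabel + '"'], // selected value, wrapped in double quotes
        o: "and",                   // copied as-is from the example URLs
        k: false,                   // copied as-is from the example URLs
        m: null,                    // copied as-is from the example URLs
      },
    ],
  };
  return "#Default=" + JSON.stringify(state);
}

console.log(buildRefinerHash("Term1", "TermSetColumnPOCManaged", "Term9"));
```

Appending the returned string to the search page URL gives the same address as the last example. The browser may display it percent-encoded.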

Friday, February 8, 2013

Asynchronous call to WebService / WCF using JQuery

In one implementation, we had to make asynchronous calls to multiple SharePoint lists for a better UI and user experience.

For better performance and a more manageable solution, we exposed a custom web service that takes care of all the data manipulation and either returns the desired result set or exposes methods for the required operations.

Here is a proof-of-concept code snippet that might help you call web services using jQuery.ajax:

    // Uri, DataType, ServiceSucceeded and ServiceFailed are assumed to be defined elsewhere.
    $.ajax({
        // type: Type,            // HTTP verb: GET, POST, PUT or DELETE
        url: Uri,                 // location of the service
        // data: Data,            // data sent to the server
        dataType: DataType,       // expected data format from the server
        cache: false,             // do not cache the response
        success: function (msg) { // called when the service call succeeds
            ServiceSucceeded(msg);
        },
        error: ServiceFailed      // called when the service call fails
    });

Full Sample Code :

asynchronous-call-to-web-service_1
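Since the goal was to call several endpoints asynchronously, the same settings can be reused in a small aggregator that waits for all calls to finish. This is only a sketch under stated assumptions: `callAll` is a hypothetical helper, the `ajax` argument is expected to behave like jQuery's `$.ajax` (pass `$.ajax` in the browser), and the endpoint paths in the usage comment are made up.

```javascript
// Hypothetical helper: fires one request per URI and calls done(results, errors)
// once every request has either succeeded or failed.
function callAll(ajax, uris, dataType, done) {
  var results = new Array(uris.length); // results[i] belongs to uris[i]
  var errors = [];
  var pending = uris.length;

  if (pending === 0) {
    done(results, errors);
    return;
  }

  function finish() {
    pending -= 1;
    if (pending === 0) {
      done(results, errors);
    }
  }

  uris.forEach(function (uri, i) {
    ajax({
      url: uri,
      dataType: dataType,
      cache: false,
      success: function (msg) {
        results[i] = msg;
        finish();
      },
      error: function (xhr, status) {
        errors.push({ url: uri, status: status });
        finish();
      }
    });
  });
}

// Usage in the browser (made-up endpoints):
// callAll($.ajax, ["/_vti_bin/Svc.svc/ListA", "/_vti_bin/Svc.svc/ListB"], "json",
//   function (results, errors) { /* update the UI here */ });
```

Passing the ajax function in as a parameter keeps the helper independent of the page, so it can be exercised with a fake function before it is wired to the real service.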

Saturday, January 12, 2013

Finding features in a content database in SharePoint 2010 using PowerShell or tools

Sometimes we have a feature ID and want to know every place where it is active, even as a dummy entry. The following script and tools might be helpful:

http://get-spscripts.com/2011/06/removing-features-from-content-database.html

or

http://featureadmin.codeplex.com/downloads/get/290833

or

http://archive.msdn.microsoft.com/WssAnalyzeFeatures

Tuesday, January 8, 2013

SharePoint 2010 Enterprise Search | SharePoint Crawl Exceptional Behaviour

Security issues to take care of while configuring SharePoint Search for public-facing portals - SharePoint

When the search crawler (which uses SPSCrawl.asmx and sitedata.asmx) comes to a site, how does it know whether it is a SharePoint site or a normal site?

There is a custom header defined by Microsoft on SharePoint web applications. Name: MicrosoftSharePointTeamServices; value like: 14.0.0.4762

It tells the crawler to treat the target as a SharePoint site and to dig down to the item level in SharePoint lists.

What if this custom header is removed on the target? The search crawler will crawl only up to the list level. If you watch with Fiddler while a site collection is being crawled, you can see that the crawler makes no calls to SPSCrawl.asmx and sitedata.asmx. The search crawl no longer treats this web application as a SharePoint site.

Now, to keep your site secure, you do not want attackers to see these custom headers, but the search crawler needs them.

There is a way out: point your search crawl at a different web application than the public-facing one. On the public-facing website, use <clear /> under the HTTP response headers to hide internal server information from the outside world.
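One way to confirm the setup is to look at the response headers of each web application (for example, as captured in Fiddler) and check whether the header is present. The following is a minimal sketch of such a check. `findSharePointHeader` is a hypothetical helper operating on a plain object of headers, and it is not how the crawler itself is implemented.

```javascript
// Hypothetical helper: looks for the MicrosoftSharePointTeamServices header in a
// plain object of response headers, ignoring case in the header name.
function findSharePointHeader(headers) {
  const wanted = "microsoftsharepointteamservices";
  for (const name of Object.keys(headers)) {
    if (name.toLowerCase() === wanted) {
      return { name: name, value: headers[name] };
    }
  }
  return null; // header not exposed
}

// Example header sets (illustrative values only).
const crawlTarget = {
  "Content-Type": "text/html",
  "MicrosoftSharePointTeamServices": "14.0.0.4762",
};
const publicSite = { "Content-Type": "text/html" };

console.log(findSharePointHeader(crawlTarget)); // header name and value
console.log(findSharePointHeader(publicSite));  // null
```

With the approach described above, the web application targeted by the crawl should still return the header, while the public-facing one should return `null` here.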
